Hacking: Control Systems Are Not Immune

Almost every day we read about a cybersecurity breach of a major bank, retailer or government computing system.

In the last few years, we have begun to learn more about successful hacking attacks against industrial control systems (supervisory control and data acquisition systems and distributed control systems). Many people who work in control system environments wonder if these systems are really vulnerable to hackers, and if they are, who would attempt to hack them.

In this article, we provide a basic working knowledge of the issues involved that can help protect control systems and hopefully inspire readers to seek out more information on the topic.

THE CURRENT THREAT

In the early 2000s, a key leader in the industrial control system community proudly stated that “control systems are not hackable” primarily because they are “built upon proprietary operating systems and software that hackers are not familiar with.” The leader pointed out that these systems typically have very little or no outside connectivity.

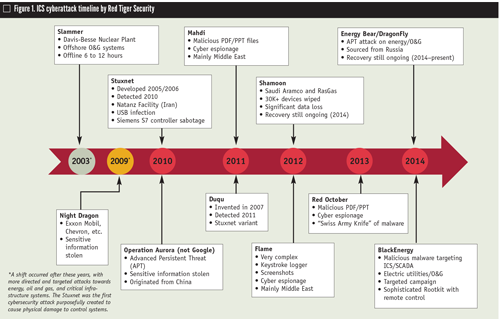

The last few years have demonstrated, however, that control systems are indeed vulnerable to compromise. To understand how we’ve come to this point, we’ll look back at the recent history of successful attacks on control systems.

A BRIEF HISTORY

In January 2003, computers at the Davis-Besse nuclear power plant were infected with the Slammer worm [2], a piece of malicious code that spread faster than any before or since. This particular infection is noteworthy for two reasons. First, the code was able to spread only because computer owners did not apply security patches in a timely manner. (Microsoft had released a security patch to mitigate the vulnerability six months before Slammer began its historic spread.) Second, during the ensuing investigation, personnel at the plant indicated they thought they were protected from such attacks because they had a firewall. Unfortunately, this lack of understanding with respect to cybersecurity is still prevalent, especially in the control system community.

Today, most knowledgeable individuals readily admit it is possible to successfully hack control systems. However, a belief also prevails that even if a control system is hacked, the safety features incorporated into the system will prevent any real damage. There is just enough truth in this belief to make it a dangerous one. While safety features in many modern control systems do make it more difficult to cause damage, they do not make it impossible. Furthermore, because the average lifecycle of a control system can be 15 to 20 years, many antiquated systems are susceptible to compromise and may lack some of the more modern safety features.

In September 2007, Cable News Network ran a television segment about the Aurora Project, a control system security evaluation conducted by the U.S. Department of Energy’s Idaho National Lab. During the evaluation, researchers demonstrated the ability to completely and stealthily take over a control system and use this control to push a highly expensive generator far beyond its operational limits, resulting in catastrophic failure of the generator [3]. The Aurora Project represents an important milestone in control system history because it proved that control systems can be successfully compromised by cyberattack to the point that the attackers have complete control and can cause significant and costly damage to equipment and to operational capability.

That brings us to Stuxnet, one of the most important milestones in control system cyberattack history. Some may not be aware that Stuxnet was a sophisticated cyberattack perpetrated against the Iranian nuclear program. The attack caused significant long-term damage to that program by driving the centrifuges used to process nuclear material out of control while displaying falsely normal behavior on operators’ consoles and suppressing alerts. Two important facts came out of Stuxnet:

- Stuxnet successfully compromised a sophisticated control system apparently not connected to the Internet in any way (it’s said to have arrived via a USB drive).

- Forensic data indicated that earlier versions of Stuxnet date back as far as 2005 [4].

In looking at the entire history of hacking, it is also important to understand that many people hold misconceptions about who is hacking control systems. They think of hackers as pimple-faced teenagers typing away at home computers (as in the 1983 movie “WarGames”). The truth is more alarming. We now know that nation states such as China and Russia are actively and systematically engaged in cyberattacks against U.S. control systems.

The U.S. Department of Homeland Security is quoted in a recent news account as saying, “Chinese hackers targeted 23 natural gas pipeline companies over seven months beginning in December 2011, and breached at least 10” [5]. The same news report also noted that FBI agents have recorded raids by other operatives in China, Russia and Iran looking for security weaknesses that could be employed to disrupt the delivery of water and electricity or impede other functions critical to the economy.

The important points to take away from this brief review are:

- Even industrial control systems that have a firewall or those not connected to the Internet are vulnerable.

- Hackers are not just individuals; they are also nation states.

ANATOMY OF A HACK

While the press of recent years has raised public awareness, a great deal of misinformation and misunderstanding remains regarding how cyberattacks occur. In fact, even some people involved in cybersecurity have no idea how such attacks actually occur.

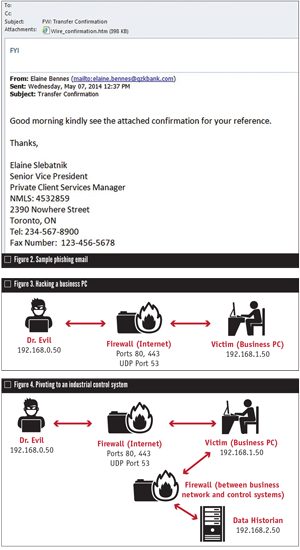

Most successful attacks fall into two categories: client-side attacks and misconfiguration-based attacks (which include attacks on unpatched systems). Client-side attacks are typically aimed at end users. They are the most common type and are widely considered the most likely to succeed.

Following is a sample four-phase attack based on a live hacking demonstration using a software program (Metasploit) that is easy to use and efficient, even for hackers with little or no programming skill.

Phase 1—Infiltration via the Internet

Once unsuspecting victims click on a link or open an attachment, they are connected to a website that automatically downloads and runs malicious code. Once the code has run, the hacker has complete control of the victim’s PC (Figure 3).

Many people would wonder if a properly configured firewall would block this attack. Most likely, it would not because the attacker (hacker) has convinced the victim to click a link. The connection to the Internet (and to the hacker’s computer) was initiated by the victim from a trusted internal network (the victim’s company). The firewall usually won’t block the traffic because it originated from a trusted source and was on a port (in this case TCP 443) used for virtually all encrypted Internet connections.

Also, because the connection between the victim’s PC and the hacker is encrypted, any security systems, including intrusion detection systems or firewalls, are blind to the contents of the communication. Therefore, those systems cannot detect malicious code or activity within the conversation.
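The firewall behavior described above can be illustrated with a deliberately simplified model. The sketch below is not any vendor's rule engine; the address range, the permitted ports and every name in it are assumptions chosen for illustration. It shows only why a perimeter policy that trusts outbound traffic on TCP 443 permits the victim-initiated connection while rejecting the same connection attempted from the outside.

```python
import ipaddress
from dataclasses import dataclass

# Assumption: the company's trusted internal network (hypothetical range).
INTERNAL_NET = ipaddress.ip_network("10.0.0.0/8")
# Assumption: outbound web ports a typical perimeter policy permits.
ALLOWED_OUTBOUND_PORTS = {80, 443}


@dataclass(frozen=True)
class ConnectionAttempt:
    src_ip: str    # who initiates the connection
    dst_ip: str    # who receives it
    dst_port: int  # destination TCP port


def is_internal(ip: str) -> bool:
    """True if the address belongs to the trusted internal network."""
    return ipaddress.ip_address(ip) in INTERNAL_NET


def perimeter_allows(attempt: ConnectionAttempt) -> bool:
    """Toy perimeter policy: allow web traffic that starts inside, block the rest.

    The decision uses only addresses and ports. The (encrypted) payload is
    never inspected, so malicious content inside the session is invisible.
    """
    outbound = is_internal(attempt.src_ip) and not is_internal(attempt.dst_ip)
    if outbound:
        return attempt.dst_port in ALLOWED_OUTBOUND_PORTS
    return False  # unsolicited inbound traffic is dropped


# Hypothetical addresses: 203.0.113.50 is from a documentation-only range.
victim_clicks_link = ConnectionAttempt("10.1.2.3", "203.0.113.50", 443)
attacker_dials_in = ConnectionAttempt("203.0.113.50", "10.1.2.3", 443)

print(perimeter_allows(victim_clicks_link))  # True
print(perimeter_allows(attacker_dials_in))   # False
```

In this model the firewall does its job correctly in both cases. The attack succeeds because the victim, not the attacker, opens the connection.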

The result of phase 1 is that the hacker now “owns” a computer on the internal trusted business network of the target victim company.

Phase 2—Pivoting toward an industrial control system

If the ultimate purpose is to find and assume command of the control system of the target company, once an adversary has taken control of a business PC, he or she likely will look for ways to compromise other systems. In hacking terms, this is referred to as “pivoting.”

In this particular example, the hacker notices the victim PC is connected to a system named “Data Historian,” which might provide information concerning activity within the control system environment. This would make it an obvious juicy target, so our hacker uses the victim business PC to attack and take over control of the Data Historian computer (Figure 4).

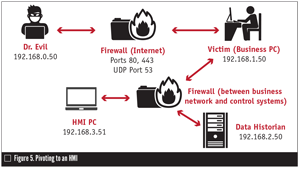

Phase 3—Pivoting to a human-machine interface or HMI (an operator’s console)

With only a basic knowledge of control systems, our attacker can easily determine that the Data Historian computer is an intermediary that receives historian data from a control system computer and provides it to computers (such as our victim PC) on the business network. Consequently, with a little digging around, he determines that the Data Historian computer is connected to a computer within the control system environment named HMI PC.

Using his existing control over the Data Historian computer and the fact it already has a connection to the HMI PC, the attacker then connects to and takes control over the HMI PC.

The more technically savvy may be able to identify ways to defend against this type of attack. Still, the methodology is realistic in that many systems could be successfully compromised using this method. Of course, if any of the steps in this method fails, an experienced attacker would simply try other avenues until he or she achieved success.
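One way defenders can reason about this kind of pivoting is to treat permitted connections as a directed graph and ask whether any path leads from the business network to the control system. The sketch below is a minimal illustration under stated assumptions: the host names mirror the example above, but the connection maps and the function name are hypothetical, and a real audit would build the graph from actual firewall and routing rules.

```python
from collections import deque

# Assumption: each host maps to the hosts it is permitted to open connections to.
FLAT_NETWORK = {
    "Business PC": ["Data Historian"],
    "Data Historian": ["HMI PC"],
    "HMI PC": [],
}

# Assumption: a segmented design in which the HMI pushes data to the historian
# and the historian may not initiate connections into the control system.
SEGMENTED_NETWORK = {
    "Business PC": ["Data Historian"],
    "Data Historian": [],
    "HMI PC": ["Data Historian"],
}


def pivot_path(graph, start, target):
    """Breadth-first search for a chain of permitted connections."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        host = path[-1]
        if host == target:
            return path
        for neighbor in graph.get(host, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no chain of permitted connections exists


print(pivot_path(FLAT_NETWORK, "Business PC", "HMI PC"))
# ['Business PC', 'Data Historian', 'HMI PC']
print(pivot_path(SEGMENTED_NETWORK, "Business PC", "HMI PC"))
# None
```

The second result covers only the permitted connections in this model. As noted above, an attacker blocked on one avenue would look for another.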

COMBATING THE THREAT

As awareness of threats has grown, the resources allocated to deal with the issues have increased dramatically. Unfortunately, simply putting more money into the problem does not guarantee a correspondingly stronger defense. According to Art Gilliland of HP Enterprise Security, “We’re spending something like $46 billion a year on cybersecurity but the percentage of breaches is increasing by 20% per year, and the cost of those breaches is increasing by 30%” [6].

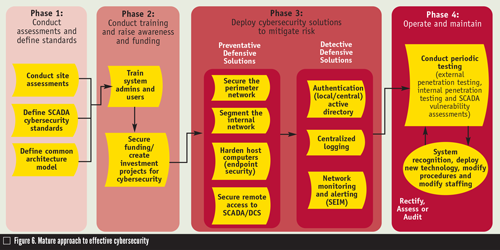

The key to an effective cybersecurity program lies in a methodical and systematic lifecycle approach. That approach might look like Figure 6 with these steps:

Phase 1—Conduct assessments and define standards.

The development of a comprehensive and effective cybersecurity program requires knowing where a company is and where it’s going. Site assessments show the strengths and weaknesses of the current cybersecurity stance and help to identify opportunities for improvement. Development of cybersecurity standards and a common architecture provide a concrete framework to use to move toward a target cybersecurity stance.

Phase 2—Conduct training and raise awareness/funding.

Developing and maintaining a strong cybersecurity stance requires a technical team that understands and practices good cybersecurity throughout the environment. It also requires money: good cybersecurity is simply not possible without proper funding. Therefore, it is imperative to get key stakeholders’ support.

Phase 3—Deploy cybersecurity solutions to mitigate risk.

Two important points need to be made for this phase. First, effective cybersecurity is always about one thing: mitigating risk to the success and well-being of the business. Second, good risk mitigation is about both preventative and detective cybersecurity measures. According to Gartner research, “too much information security spending has focused on the prevention of attacks and not enough has gone into security monitoring and response capabilities” [7]. A balanced approach includes both preventative and detective measures.
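To make the idea of a detective measure concrete, the sketch below flags observed connections that cross network zones in a direction the design does not expect. It is an illustration only. The zone names, host names, expected flows and log format are all assumptions, and a real monitoring system would draw on actual network telemetry.

```python
# Assumption: each host is assigned to a network zone (hypothetical names).
ZONES = {
    "victim-pc": "business",
    "data-historian": "dmz",
    "hmi-pc": "control",
}

# Assumption: the only cross-zone flows the design expects, as
# (initiating zone, receiving zone) pairs.
EXPECTED_FLOWS = {
    ("business", "dmz"),  # business users read historian data
    ("control", "dmz"),   # control system pushes data to the historian
}


def unexpected_flows(connection_log):
    """Return the (source, destination) pairs that violate the expected design."""
    alerts = []
    for src, dst in connection_log:
        src_zone = ZONES.get(src, "unknown")
        dst_zone = ZONES.get(dst, "unknown")
        crosses_zones = src_zone != dst_zone
        if crosses_zones and (src_zone, dst_zone) not in EXPECTED_FLOWS:
            alerts.append((src, dst))
    return alerts


# Hypothetical log of observed connections (initiator, receiver).
observed = [
    ("victim-pc", "data-historian"),  # expected
    ("hmi-pc", "data-historian"),     # expected
    ("data-historian", "hmi-pc"),     # historian reaching into the control zone
]

print(unexpected_flows(observed))
# [('data-historian', 'hmi-pc')]
```

A check like this does not prevent the pivot in the earlier example, but it gives operators a chance to notice it and respond.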

Phase 4—Operate and maintain.

Good cybersecurity is a continuous, iterative process that is incorporated or “baked in” to all related processes, including design, system configuration development and deployment, configuration management and lifecycle management. Periodic penetration testing is necessary to validate that the company is still on the right track and that no new vulnerabilities have been introduced into the environment.

CONCLUSION

Modern industrial control systems are increasingly connected, either directly or indirectly, to the Internet. More than ever, adversaries have sufficient knowledge, funding and resources to devote the necessary time and effort to successfully attack and compromise control systems.

DHS says: “The national effort to strengthen critical infrastructure security and resilience depends on the ability of public and private critical infrastructure owners and operators to make risk-informed decisions when allocating limited resources in both steady-state and crisis operations” [8].

Armed with the information in this article and with a systematic approach to effective cybersecurity, companies can make appropriate decisions on how to mitigate risk in control system environments.

Chris Shipp is chief information security officer, Fluor Federal Petroleum Operations, a contractor to the U.S. Department of Energy. Shipp is working within the cybersecurity community to develop and implement better information security within industrial control system environments. Reach him at cshipp@bluenight.us.

Jonathan Pollet is the founder and executive director of Red Tiger Security (www.redtigersecurity.com). He was one of the first to publish white papers that exposed the need for security for SCADA/Industrial Control Systems technology and has led security teams on over 250 assessments, penetration tests and red team physical breaches. Reach him at jpollet@redtigersecurity.com.

References

1. http://threatpost.com/cyberattacks-most-imminent-threat-to-u-s-economy/109039

2. www.securityfocus.com/news/6767

3. edition.cnn.com/2007/US/09/26/power.at.risk/index.html

4. www.scmagazine.com/rsa-2013-symantec-shows-proof-that-stuxnet-has-been-striking-since-at-least-2007/article/281979/

5. www.bloomberg.com/news/2014-06-13/uglygorilla-hack-of-u-s-utility-exposes-cyberwar-threat.html

6. www.smh.com.au/it-pro/security-it/billions-spent-on-cybersecurity-and-much-of-it-wasted-20140403-zqprb.html

7. Prevention Is Futile in 2020: Protect Information Via Pervasive Monitoring and Collective Intelligence, MacDonald, Gartner G00252476

8. NIPP 2013, Partnering for Critical Infrastructure Security and Resilience, DHS, p. 1
